Human-Centred Explainability

Inmates Running the Asylum

Operational Vacuum

- Focused on technology

- Little consideration for the real world

- Implicit target audience – researchers and technologists

Example use case: explanatory debugging (Kulesza et al. 2015)

Who’s at the Other End?

Explanations arise to

- address inconsistency with the explainee’s beliefs, expectations or mental model, e.g., an unexpected ML prediction causing a disagreement

- support learning or provide information needed by an explainee to solve a problem or complete a task

Delivered at the request of a human explainee

An explanation is an answer to a “Why?” question (Miller 2019)

Enter Human-Centred Agenda

Humans expect explanations to be (Miller 2019)

- contrastive

- selective

- not overly technical

- social

Composing an Explanation

- topic – what should be explained

- stakeholder – to whom something should be explained

- goal – why something should be explained

- instrument – how something should be explained
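As an illustration, the four dimensions can be recorded together when designing an explainer. The sketch below assumes a hypothetical `ExplanationSpec` class and an invented loan scenario. It is one possible way of making the dimensions explicit, not a prescribed structure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExplanationSpec:
    """Hypothetical container for the four dimensions of an explanation."""
    topic: str        # what should be explained
    stakeholder: str  # to whom it should be explained
    goal: str         # why it should be explained
    instrument: str   # how it should be explained

    def describe(self) -> str:
        return (f"Explain {self.topic} to {self.stakeholder} "
                f"in order to {self.goal}, using {self.instrument}.")


# Invented example scenario (an assumption for illustration).
loan_case = ExplanationSpec(
    topic="a denied loan application",
    stakeholder="the loan applicant",
    goal="identify actions that could lead to approval",
    instrument="a counterfactual statement",
)
print(loan_case.describe())
```

Changing any one field, e.g. the stakeholder from the applicant to a regulator, would typically call for a different instrument as well.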

Complications

- Diversity of human goals and expectations

- Human biases, e.g., The Illusion of Explanatory Depth (Rozenblit and Keil 2002)

Counterfactual explanations are specific to a data point

Had you been 5 years older, your loan application would be accepted.
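A minimal sketch of how such a data-point-specific counterfactual might be found. It assumes a hypothetical integer-scored credit model, hypothetical search grids and scales, and a brute-force search over single-feature changes, which keeps the result sparse. None of these names or numbers come from the source, and this is just one simple search strategy among many.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical toy credit model (an assumption, not from the source):
# an integer score, with applications approved at 160 points or more.
def approve(applicant: Dict[str, int]) -> bool:
    score = (4 * applicant["age"]
             + applicant["income"] // 250
             - 20 * applicant["credit_cards"])
    return score >= 160

# Hypothetical search grid: candidate changes per feature and a scale that
# makes changes measured in different units comparable.
SEARCH_SPACE: Dict[str, Tuple[List[int], int]] = {
    "age": (list(range(-20, 21)), 20),                    # years
    "income": (list(range(-20000, 20001, 1000)), 10000),  # pounds
    "credit_cards": ([-3, -2, -1, 1, 2, 3], 1),           # number of cards
}

def counterfactual(applicant: Dict[str, int]) -> Optional[Tuple[str, int]]:
    """Brute-force search for the cheapest single-feature change that flips
    the decision for this particular applicant (cost = |change| / scale)."""
    original = approve(applicant)
    best: Optional[Tuple[float, str, int]] = None
    for feature, (deltas, scale) in SEARCH_SPACE.items():
        for delta in deltas:
            if delta == 0:
                continue
            candidate = {**applicant, feature: applicant[feature] + delta}
            if approve(candidate) != original:
                cost = abs(delta) / scale
                if best is None or cost < best[0]:
                    best = (cost, feature, delta)
    return None if best is None else (best[1], best[2])

# Hypothetical phrasing templates turning a change into a contrastive statement.
TEMPLATES = {
    "age": lambda d: f"been {abs(d)} years {'older' if d > 0 else 'younger'}",
    "income": lambda d: f"earned £{abs(d):,} {'more' if d > 0 else 'less'}",
    "credit_cards": lambda d: f"had {abs(d)} {'more' if d > 0 else 'fewer'} credit card(s)",
}

def render(feature: str, delta: int) -> str:
    # Assumes the original application was denied.
    return (f"Had you {TEMPLATES[feature](delta)}, "
            "your loan application would have been accepted.")

applicant = {"age": 30, "income": 20000, "credit_cards": 3}
change = counterfactual(applicant)
if change is not None:
    print(render(*change))
```

Under these assumptions the search prints `Had you been 5 years older, your loan application would have been accepted.` for this applicant. A different applicant would generally receive a different counterfactual, and nothing in the search prevents it from proposing changes to attributes the explainee cannot act upon.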

Contrastive Statements

Why?

- Easy to understand (sparse by default)

- Common in everyday life

- Available in diverse formats (e.g., textual or visual)

- Actionable from a technical perspective

- Compliant with regulatory frameworks (e.g., GDPR) (Wachter, Mittelstadt, and Russell 2017)

Example

Had you been 10 years younger,

your loan application would be accepted.

Duck Test

If it looks like a duck, swims like a duck, and quacks like a duck, then it probably is a duck.

- Counterfactuals are not necessarily causal!

Actionability

Had you been 10 years younger,

your loan application would be accepted.

Had you paid back one of your credit cards,

your loan application would be accepted.
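One simple way to operationalise actionability is to check each proposed change against per-feature constraints. The sketch below assumes a hypothetical `CONSTRAINTS` table (age treated as not actionable, credit cards only reducible) and a hypothetical `is_actionable` helper.

```python
from typing import Dict

# Hypothetical actionability constraints (assumptions, not from the source).
# Allowed rules: "fixed", "increase", "decrease", "any".
CONSTRAINTS: Dict[str, str] = {
    "age": "fixed",              # not something the explainee can act upon
    "income": "any",
    "credit_cards": "decrease",  # e.g. paying back or closing a card
}

def is_actionable(changes: Dict[str, int],
                  constraints: Dict[str, str] = CONSTRAINTS) -> bool:
    """Return True only if every proposed change respects its feature's rule."""
    for feature, delta in changes.items():
        rule = constraints.get(feature, "fixed")  # unknown features are treated as fixed
        if delta == 0:
            continue
        if rule == "fixed":
            return False
        if rule == "increase" and delta < 0:
            return False
        if rule == "decrease" and delta > 0:
            return False
    return True

print(is_actionable({"age": -10}))          # False: "10 years younger"
print(is_actionable({"credit_cards": -1}))  # True: "paid back one of your credit cards"
```

Passing this check says nothing about whether the change actually flips the model's decision. In practice such constraints would more likely restrict the counterfactual search itself than filter its output afterwards.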

Feasibility

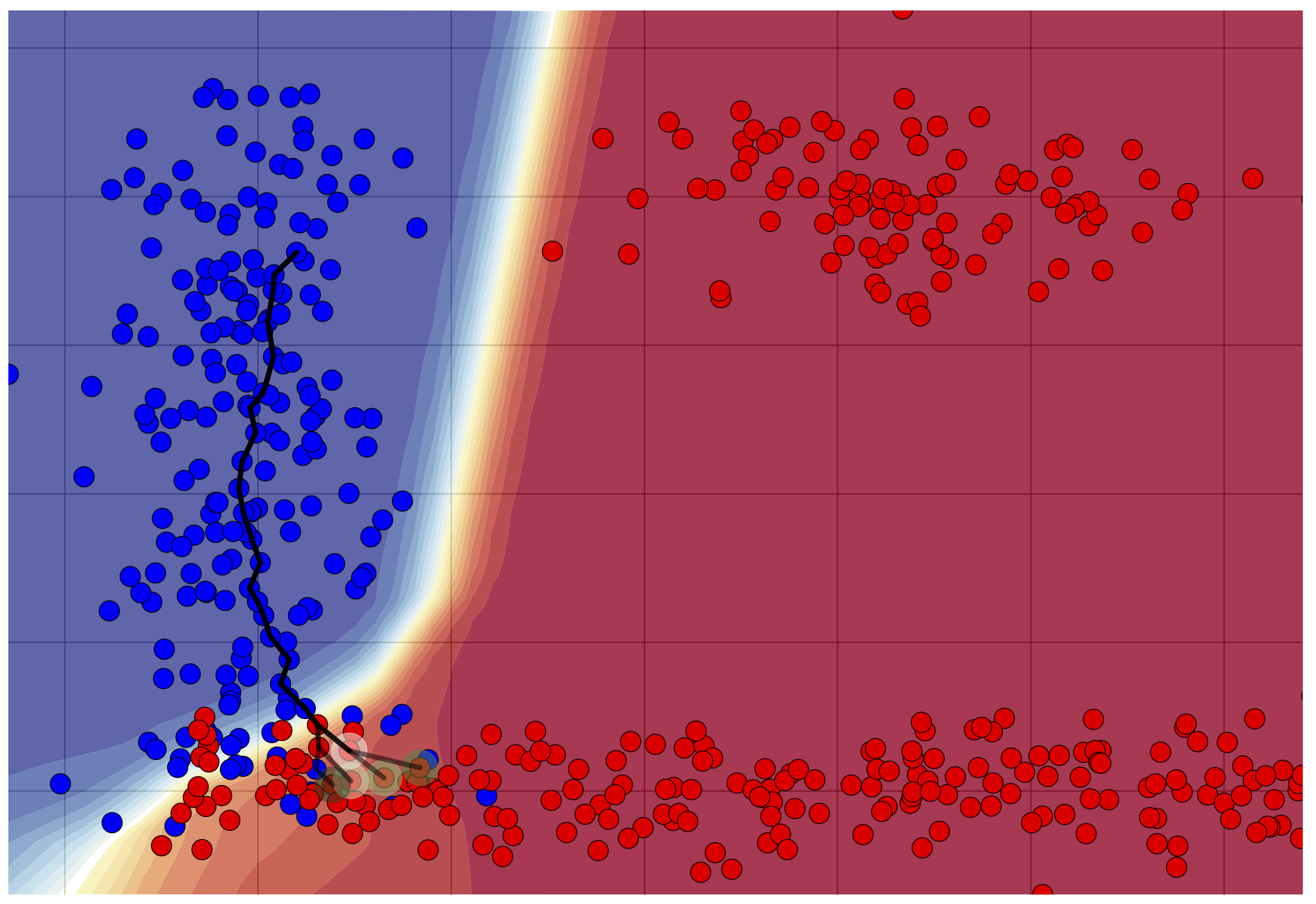

Algorithmic Recourse

- Where to go and how to get there

- A sequence of steps (actions) guiding the explainee towards the desired goal with explanations phrased as actionable recommendations

- Independent manipulation of individual attributes is undesirable – think feature correlation

- Instead, these actions can be modelled as (causal) interventions
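To see why independent manipulation can mislead, consider a minimal sketch of the abduction-action-prediction steps for a hypothetical two-variable structural causal model in which income causally affects savings. The structural equation, the credit model and its threshold are all assumptions for illustration, not taken from the source.

```python
from typing import Dict

# Hypothetical structural causal model (an assumption for illustration):
#   income  := u_income
#   savings := income // 5 + u_savings
# i.e. income causally affects savings.

def savings_equation(income: int, u_savings: int) -> int:
    return income // 5 + u_savings

def abduct(x: Dict[str, int]) -> Dict[str, int]:
    """Abduction: recover the exogenous noise consistent with the observed individual."""
    return {"u_savings": x["savings"] - x["income"] // 5}

def intervene(x: Dict[str, int], do: Dict[str, int]) -> Dict[str, int]:
    """Action and prediction: set intervened variables, recompute their descendants."""
    noise = abduct(x)
    income = do.get("income", x["income"])
    savings = do.get("savings", savings_equation(income, noise["u_savings"]))
    return {"income": income, "savings": savings}

def approve(x: Dict[str, int]) -> bool:
    # Hypothetical credit model that reads both variables.
    return x["income"] // 1000 + x["savings"] // 1000 >= 32

applicant = {"income": 20000, "savings": 6000}
action = {"income": 25000}

independent = {**applicant, **action}  # ignores the effect of income on savings
causal = intervene(applicant, action)  # propagates it to savings

print(independent, approve(independent))  # savings stay at 6000, denied
print(causal, approve(causal))            # savings rise to 7000, approved
```

Under these assumptions the same action looks insufficient when features are manipulated independently, but suffices once its downstream effect on savings is taken into account, so a recourse method ignoring the causal structure could recommend more effort than necessary.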

Human Explainability

- Bi-directional explanatory process

- Questioning and explanatory utterances

- Conversational

Interactive Explainability

- One of the tenets of human-centred explainability

- Largely neglected and unavailable (Schneider and Handali 2019)

Conversational Explainability

- Interactive personalisation and tuning of the explanations

- Guiding the explainer to retrieve tailored insights

Naïve Realisation

Dialogue-based personalisation

Why was my loan application denied?

Because of your income. Had you earned £5,000 more, it would have been granted.

Instead of increasing my income, is there anything I can do about my outstanding debt to get this loan approved?

If you cancel one of your three credit cards, you will receive the loan.
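The naïve dialogue can be mimicked with a minimal sketch, assuming a hypothetical toy credit model, search grid and `ExplanatoryDialogue` class, none of which come from the source. Each explainee turn may rule out further features, and the explainer recomputes the closest single-feature counterfactual under the accumulated exclusions.

```python
from typing import Dict, Iterable, Optional, Set, Tuple

# Hypothetical toy credit model (an assumption for illustration):
# approve when 4*age + income//250 - 20*credit_cards reaches 160.
def approve(x: Dict[str, int]) -> bool:
    return 4 * x["age"] + x["income"] // 250 - 20 * x["credit_cards"] >= 160

# Hypothetical search grid: feature -> (candidate changes, scale).
SPACE: Dict[str, Tuple[range, int]] = {
    "age": (range(-20, 21), 20),
    "income": (range(-20000, 20001, 1000), 10000),
    "credit_cards": (range(-3, 4), 1),
}

def closest_change(x: Dict[str, int], exclude: Set[str]) -> Optional[Tuple[str, int]]:
    """Cheapest single-feature change that flips the decision, skipping excluded features."""
    options = [
        (abs(d) / scale, f, d)
        for f, (deltas, scale) in SPACE.items() if f not in exclude
        for d in deltas
        if d != 0 and approve({**x, f: x[f] + d}) != approve(x)
    ]
    return min(options)[1:] if options else None

class ExplanatoryDialogue:
    """Hypothetical dialogue state: each turn may rule out further features,
    and the counterfactual is recomputed under the accumulated constraints."""

    def __init__(self, applicant: Dict[str, int], immutable: Iterable[str]):
        self.applicant = applicant
        self.excluded: Set[str] = set(immutable)

    def ask(self, exclude: Iterable[str] = ()) -> Optional[Tuple[str, int]]:
        self.excluded.update(exclude)
        return closest_change(self.applicant, self.excluded)

dialogue = ExplanatoryDialogue({"age": 30, "income": 20000, "credit_cards": 3},
                               immutable={"age"})
first = dialogue.ask()                     # "Why was my loan application denied?"
second = dialogue.ask(exclude={"income"})  # "Instead of increasing my income, ..."
print(first, second)
```

Under these assumptions the first turn proposes an income increase of £5,000 and, once income is ruled out, the second proposes one fewer credit card, mirroring the exchange above. A real conversational explainer would also need to interpret free-text requests, which this sketch sidesteps by passing feature names directly.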

Interactive ≠ Social

Interactivity alone is insufficient, e.g., a static explanation presented through a dynamic user interface

Vehicle to personalise content (and other aspects)

Bespoke explanatory experience driven by context

Wrap Up

Summary

Producing explanations is necessary but insufficient for human-centred explainability

These insights need to be relevant and comprehensible to explainees in their specific context


Explainers are socio-technical constructs, hence we should strive for seamless integration with humans as well as technical correctness and soundness


Each (real-life) explainability scenario is unique and requires a bespoke solution


Bibliography

Questions

Social Process